Why Neuromorphic Computing Matters in 2025

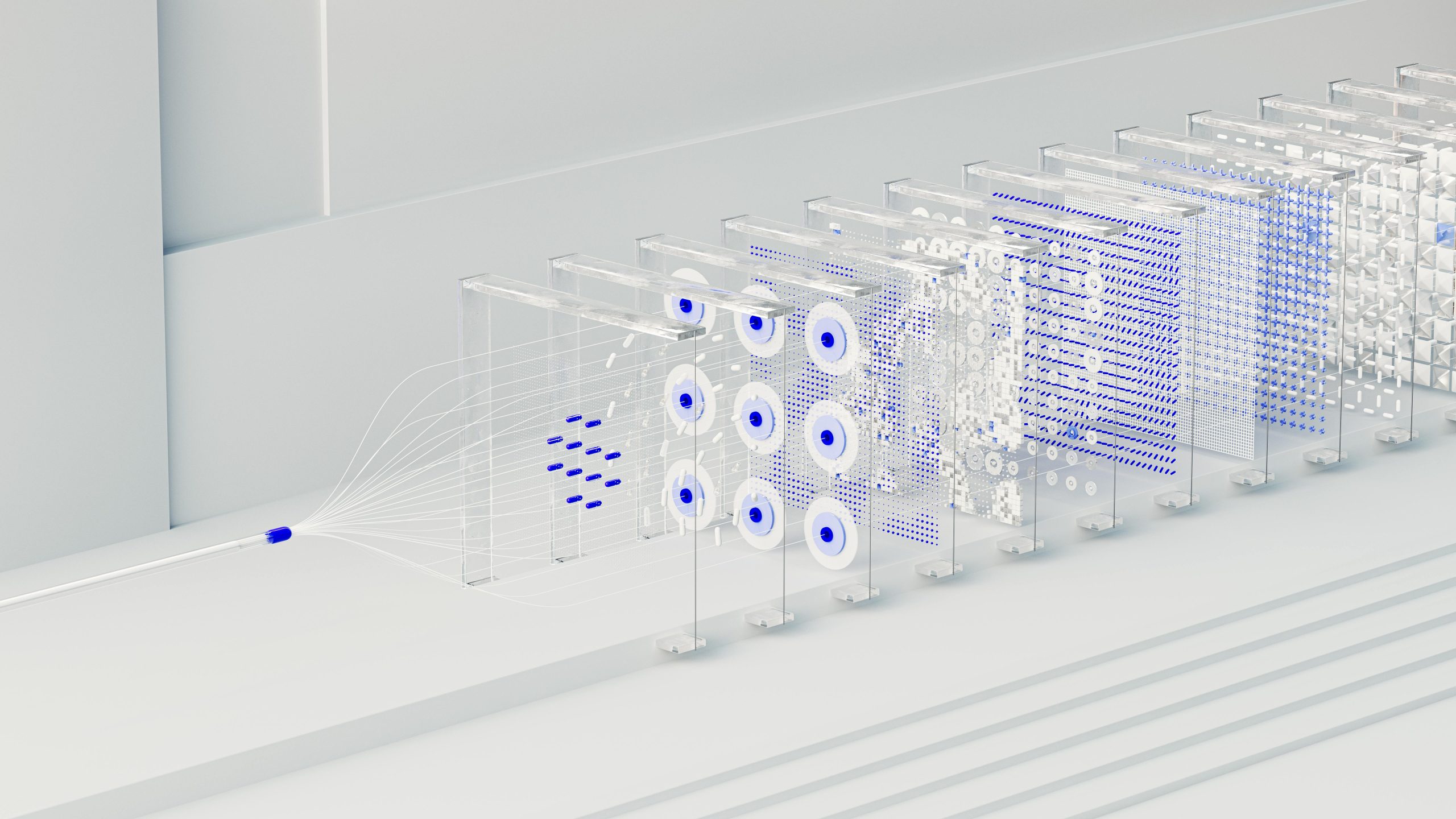

Neuromorphic computing has emerged as a breakthrough approach that mimics the structure of the human brain’s neural networks to perform computation. In 2025, the technology is attracting significant attention because it has the potential to overcome the limits of conventional computing systems, especially in speed and power consumption. Neuromorphic computing matters in 2025 because it addresses AI’s escalating energy crisis, meets the real-time processing requirements of mission-critical applications, and drives the shift to edge AI.

This blog post examines why neuromorphic computing matters, focusing on its energy efficiency, its ability to process data in real time, its role in edge AI, and its impact on real-world industries.

Energy Efficiency: A Game-Changer for AI

One of the strongest benefits of neuromorphic computing is its energy efficiency. As increasingly sophisticated AI systems are deployed, their power consumption has soared. Traditional von Neumann architectures make the problem worse: because memory and processing are separate, data must be shuttled between them repeatedly, and every transfer wastes energy. With data centers forecast to consume 20% of the world’s electricity supply by 2025 (UCL Policy Brief), energy-efficient alternatives are a necessity.

Neuromorphic computing integrates processing and memory, mimicking the brain, where neurons and synapses both store and process information. This in-memory approach eliminates much of the wasteful data movement and saves a large amount of energy. For example, training a sophisticated AI model such as GPT-4 takes about 50 gigawatt-hours (GWh) of electricity. Neuromorphic chips could reduce that to well below 1% of the amount, making AI far greener (DevTech Insights).
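A toy model helps show why keeping data where it is stored saves energy. The sketch below counts the words that cross the memory bus for one fully connected layer under two simplified assumptions: in the von Neumann case every weight is fetched from separate memory on each inference (no caching), and in the in-memory case the weights never leave the memory array, so only inputs and outputs move. The function names and the 1024×1024 layer size are hypothetical, and transfer counts are only a rough proxy for energy, not a hardware simulation.

```python
# Toy model (illustrative assumptions, not a hardware simulation):
# count the words that cross the memory bus for one fully connected layer.


def von_neumann_transfers(n_in: int, n_out: int) -> int:
    """Separate memory: fetch every weight and input, write back every output."""
    weights = n_in * n_out
    return weights + n_in + n_out


def in_memory_transfers(n_in: int, n_out: int) -> int:
    """In-memory: weights stay in the array where they are stored; only activations move."""
    return n_in + n_out


if __name__ == "__main__":
    n_in, n_out = 1024, 1024  # hypothetical layer size
    vn = von_neumann_transfers(n_in, n_out)
    im = in_memory_transfers(n_in, n_out)
    print(f"von Neumann: {vn} words, in-memory: {im} words, ratio: {vn / im:.0f}x")
    # prints: von Neumann: 1050624 words, in-memory: 2048 words, ratio: 513x
```

Because the weight count grows with the product of layer dimensions while activations grow only with their sum, the gap widens as layers get larger.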

In addition, neuromorphic systems use spiking neural networks (SNNs), another source of energy savings: neurons fire (spike) only when needed. Unlike conventional processors, which draw power continuously, this event-driven approach lets neuromorphic chips run on milliwatts, making them suitable for battery-powered devices such as IoT sensors that can operate for years without a recharge (ZDNet).
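The event-driven behavior of an SNN neuron can be sketched with the leaky integrate-and-fire (LIF) model, a common simplified neuron model in SNN research. This is a minimal illustration, assuming arbitrary parameter values (`tau`, `v_threshold`, input levels); it is not how any particular chip, such as Loihi, implements its neurons.

```python
def simulate_lif(input_current, tau=20.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire (LIF) neuron.

    input_current: sequence with one input value per time step.
    Returns (membrane-potential trace, list of time steps at which it spiked).
    """
    v = v_reset
    trace = []
    spikes = []
    for t, i_in in enumerate(input_current):
        # Forward-Euler step of dv/dt = -v / tau + I: leak toward 0, add the input.
        v += dt * (-v / tau + i_in)
        if v >= v_threshold:
            spikes.append(t)  # emit a spike event
            v = v_reset       # reset the membrane potential after firing
        trace.append(v)
    return trace, spikes


if __name__ == "__main__":
    # 100 silent steps, a 10-step input burst, then 100 silent steps.
    current = [0.0] * 100 + [0.3] * 10 + [0.0] * 100
    _, spike_times = simulate_lif(current)
    print(f"{len(spike_times)} spikes in {len(current)} steps at {spike_times}")
    # prints: 2 spikes in 210 steps at [103, 107]
```

The neuron stays silent while there is no input and produces only two events during the burst. In an event-driven system, downstream work is triggered only by those events rather than by every clock tick.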

| Aspect | Traditional Computing | Neuromorphic Computing |
|---|---|---|
| Energy Consumption | High (e.g., 28 MW for supercomputers) | Low (e.g., milliwatts for IoT devices) |
| Processing-Memory Design | Separate (von Neumann bottleneck) | Integrated (in-memory computing) |
| Activation Model | Continuous operation | Event-driven (SNNs) |
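The "Activation Model" row can be made concrete by counting synaptic operations. The sketch below compares a clocked dense layer, which touches every weight on every time step, with an event-driven layer that does one accumulate per outgoing synapse only when an input spikes. The layer sizes and the 2% spiking activity are hypothetical, and operation counts are not a direct measure of energy on real hardware.

```python
import random


def dense_ops(n_inputs: int, n_outputs: int, n_steps: int) -> int:
    """Clocked dense layer: every weight is used on every step, active or not."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains, n_outputs: int) -> int:
    """Event-driven layer: work happens only when an input spikes.

    spike_trains[i][t] is 1 if input i spiked at step t, else 0. Each spike
    triggers one accumulate per outgoing synapse (n_outputs of them).
    """
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * n_outputs


if __name__ == "__main__":
    random.seed(0)
    n_inputs, n_outputs, n_steps = 100, 100, 1000
    activity = 0.02  # hypothetical: each input spikes on about 2% of steps
    trains = [[1 if random.random() < activity else 0 for _ in range(n_steps)]
              for _ in range(n_inputs)]
    dense = dense_ops(n_inputs, n_outputs, n_steps)
    sparse = event_driven_ops(trains, n_outputs)
    print(f"dense: {dense:,} ops, event-driven: {sparse:,} ops ({sparse / dense:.1%})")
```

With sparse activity, the event-driven count lands near the activity rate times the dense count, which is the intuition behind the "event-driven" entry in the table.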

Real-Time Processing: Enabling Timely Decisions

Neuromorphic computing’s ability to process data in real time is critical for the time-sensitive applications of 2025. Conventional systems are slowed by the von Neumann bottleneck, in which data constantly flows back and forth between the CPU and memory. The resulting delays are a problem for applications that require instant decisions, such as autonomous driving or medical diagnosis.

Neuromorphic chips, inspired by the brain’s processing model, perform sophisticated operations with minimal latency. Intel’s Loihi chip, for instance, responds in microseconds, whereas GPUs take milliseconds (DevTech Insights). This speed is essential for collision avoidance in autonomous vehicles, where any delay can be dangerous. Mercedes-Benz, for example, uses neuromorphic technology with 0.1 ms latency to enhance vehicle safety.
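A quick calculation shows why latency matters on the road: a vehicle keeps moving while the system processes its inputs. The sketch below computes the distance covered at 100 km/h during the 0.1 ms figure cited above and during two slower, hypothetical processing delays. It deliberately ignores sensor delay, actuation, and braking distance.

```python
def distance_during_latency_m(speed_kmh: float, latency_s: float) -> float:
    """Distance (metres) a vehicle covers before the system can react."""
    speed_ms = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_ms * latency_s


if __name__ == "__main__":
    speed = 100.0  # km/h, illustrative highway speed
    # 0.1 ms is the latency cited above; 10 ms and 100 ms are hypothetical comparisons.
    for label, latency in [("0.1 ms", 1e-4), ("10 ms", 1e-2), ("100 ms", 1e-1)]:
        d = distance_during_latency_m(speed, latency)
        print(f"{label:>7}: {d * 100:.1f} cm travelled before reacting")
```

At 100 km/h, a 0.1 ms delay corresponds to under 3 mm of travel, while a 100 ms delay corresponds to nearly 3 m.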

In healthcare, neuromorphic computing enables fast processing of electroencephalography (EEG) signals, predicting epileptic seizures with 95% accuracy (DevTech Insights). Such predictions allow early intervention and can save lives. Edge AI also comes into play in robotics and drones, where fast navigation and interaction with the environment are essential.
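Spiking hardware consumes events, so a continuous signal such as EEG must first be converted into spikes. One common approach is delta (send-on-delta) encoding, which emits an event only when the signal moves by a set threshold. The sketch below assumes this scheme; it is not necessarily the encoding used in the work cited above. The synthetic signal, sampling rate, and threshold are hypothetical.

```python
import math


def delta_encode(samples, threshold):
    """Convert a sampled signal into ON/OFF events (delta encoding).

    An event is emitted only when the signal has moved by at least `threshold`
    since the last event: +1 (ON) for an increase, -1 (OFF) for a decrease.
    Returns a list of (sample_index, polarity) tuples.
    """
    if not samples:
        return []
    events = []
    reference = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        # A large jump can cross the threshold several times: one event per crossing.
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events


if __name__ == "__main__":
    # Hypothetical stand-in for one EEG channel: 1 s flat, then a 1 s 10 Hz burst, at 250 Hz.
    fs = 250
    signal = [0.0] * fs + [math.sin(2 * math.pi * 10 * n / fs) for n in range(fs)]
    events = delta_encode(signal, threshold=0.25)
    print(f"{len(signal)} samples -> {len(events)} events")
```

The flat first second produces no events at all, so a downstream spiking network has nothing to process until the signal actually changes.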

Edge AI: Intelligence at the Device Level

Neuromorphic computing is best leveraged in edge AI, where processing happens locally on the device rather than in the cloud. The shift to edge AI is accelerating in 2025 because enterprises need faster, more personalized, and more optimized AI. Being low-power and energy-efficient, neuromorphic chips allow sophisticated AI computations on power-constrained devices such as smartphones, wearables, and IoT sensors.

For instance, Qualcomm’s Zeroth platform applies neuromorphic principles to enable on-device processing for mobile and IoT devices (DevTech Insights). It supports real-time applications such as facial recognition and natural language understanding without requiring a constant internet connection. According to a 2025 McKinsey report, 78% of businesses value edge AI running on neuromorphic hardware for its efficiency and privacy benefits.

Edge AI also improves data security: sensitive data is processed locally, which reduces the risk of leaks through network exposure. This makes it especially well suited to healthcare wearables and smart home devices, where user privacy must be protected.

| Application | Edge AI Benefit | Neuromorphic Advantage |
|---|---|---|
| Facial Recognition | Improved privacy | Low-power, real-time processing |
| Smart Home Devices | Reduced latency | No dependence on the cloud |
| Healthcare Wearables | Secure data processing | Efficient operation on low power |

Industry Applications: Real-World Impact

Neuromorphic computing is transforming industries with powerful, real-time solutions. In medicine, researchers at the Mayo Clinic have used neuromorphic chips to analyze EEG data and forecast epileptic seizures with 95% accuracy, enabling early intervention (DevTech Insights). This shows the technology’s potential to improve patient care.

In the automotive industry, neuromorphic computing improves safety features. Mercedes-Benz uses it in its collision prevention systems with a latency of 0.1 ms, significantly lower than that of earlier systems (DevTech Insights). This helps make autonomous vehicles more trustworthy.

In agriculture, neuromorphic computing is expected to power low-power soil sensors capable of reporting for 10 years on a single battery. These sensors enable precise irrigation and higher crop yields while addressing sustainability concerns (DevTech Insights).
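To put "10 years on a single battery" in perspective, the sketch below computes the average power a battery can sustain over that lifetime. It assumes a hypothetical 2,000 mAh battery at 3 V (roughly two AA cells in series) and ignores self-discharge and temperature effects, so real budgets would be lower.

```python
HOURS_PER_YEAR = 365 * 24  # ignores leap days


def average_power_budget_uw(capacity_mah: float, voltage_v: float, years: float) -> float:
    """Average power (microwatts) a battery can sustain over the given lifetime."""
    energy_wh = capacity_mah / 1000 * voltage_v  # mAh -> Ah, then Ah * V = Wh
    hours = years * HOURS_PER_YEAR
    return energy_wh / hours * 1e6  # W -> microwatts


if __name__ == "__main__":
    budget = average_power_budget_uw(2000, 3.0, 10)
    print(f"average budget: {budget:.1f} microwatts")
    # prints: average budget: 68.5 microwatts
```

Under these assumptions the sensor must average well under a tenth of a milliwatt, so a device that draws milliwatts while active has to stay idle most of the time. Event-driven operation, which does work only when something changes, fits this constraint.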

Other applications include robotics, where neuromorphic systems excel at gesture recognition and locomotion, and scientific computing, where they are being applied to difficult problems such as graph partitioning and epidemic modeling (Nature Computational Science).

Conclusion: The Future of Neuromorphic Computing

As neuromorphic computing evolves, its role in 2025 is substantial. Its energy efficiency addresses AI’s energy crisis, and its real-time processing powers critical applications. Edge AI enabled by neuromorphic chips is expanding what devices can do, making them smarter and safer. With a projected compound annual growth rate (CAGR) of 108% by 2025, neuromorphic computing is poised to become a pillar of technological growth (DevTech Insights).

Looking ahead, ongoing work such as Intel’s Loihi and IBM’s TrueNorth holds even more promise. By mimicking the brain’s flexibility and efficiency, neuromorphic computing opens new pathways in AI, robotics, and beyond, toward a more intelligent and sustainable future.